LIBSVM — A Library for Support Vector Machines

Posted by Armando Brito Mendes | Filed under software

Chih-Chung Chang and Chih-Jen Lin

Version 3.17 was released on April Fools’ Day, 2013. It slightly adjusts the way class labels are handled internally. By default, labels are ordered by their first occurrence in the training set. Hence, for a set with -1/+1 labels, if -1 appears first, then internally -1 becomes +1. This has caused confusion. Now, for data with -1/+1 labels, we specifically ensure that internally the binary SVM has positive data corresponding to the +1 instances. For developers, see the changes in the subroutine svm_group_classes of svm.cpp.
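The ordering rule in this release note can be sketched in a few lines of Python. This is only an illustration of the behavior described above, not the code in svm_group_classes; the function name `order_labels` is hypothetical.

```python
def order_labels(labels):
    """Return the distinct labels in order of first occurrence.

    Hypothetical helper: it mirrors the rule described in the release
    note, not the actual LIBSVM implementation.
    """
    ordered = []
    for y in labels:
        if y not in ordered:
            ordered.append(y)
    # Special case from the note: for -1/+1 data where -1 appears first,
    # put +1 first so that the positive class corresponds to +1.
    if ordered == [-1, 1]:
        ordered = [1, -1]
    return ordered


print(order_labels([-1, 1, 1, -1]))  # -1/+1 data with -1 seen first
print(order_labels([3, 1, 3, 2]))    # other labels keep first-occurrence order
```

In this sketch, labels other than the -1/+1 pair are left in first-occurrence order, which matches the default behavior the note describes.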

We now have a page, LIBSVM data sets, that provides problems in LIBSVM format.

A practical guide to SVM classification is available now! (mainly written for beginners)

LIBSVM tools available now!

We now have an easy script (easy.py) for users who know NOTHING about SVM. It makes everything automatic, from data scaling to parameter selection.

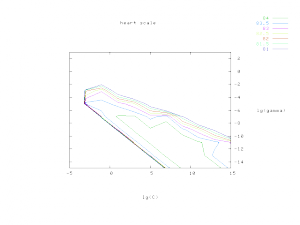

The parameter selection tool grid.py generates a contour plot of cross-validation accuracy like the one shown above. To use this tool, you also need to install Python and gnuplot.
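The idea behind this kind of parameter selection can be sketched as a plain grid search. The sketch below assumes the two parameters being tuned are the SVM cost C and the RBF kernel gamma, searched on a log2 grid; the text above does not say this, and the grid ranges, the `grid_search` function, and the `toy_accuracy` stand-in are all hypothetical. It does not call grid.py or LIBSVM.

```python
import itertools
import math


def grid_search(cv_accuracy, log2c_values, log2g_values):
    """Evaluate cv_accuracy(C, gamma) on every grid point and return the
    best (accuracy, log2c, log2g) triple. Assumed interface, for illustration."""
    best = None
    for log2c, log2g in itertools.product(log2c_values, log2g_values):
        acc = cv_accuracy(2.0 ** log2c, 2.0 ** log2g)
        if best is None or acc > best[0]:
            best = (acc, log2c, log2g)
    return best


def toy_accuracy(c, gamma):
    """Stand-in for a real cross-validation run: a smooth surface that
    peaks at log2(C) = 3, log2(gamma) = -5. Not real data."""
    return 100.0 - (math.log2(c) - 3) ** 2 - (math.log2(gamma) + 5) ** 2


best_acc, best_log2c, best_log2g = grid_search(
    toy_accuracy, range(-5, 16, 2), range(3, -16, -2)
)
print(best_acc, best_log2c, best_log2g)
```

In practice the stand-in would be replaced by a function that trains and cross-validates a model for each parameter pair; the accuracy values collected over the grid are what a contour plot would display.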

Tags: knowledge capture, data mining, optimization, R-software, RapidMiner, WEKA

Cross-validation in RapidMiner

Posted by Armando Brito Mendes | Filed under software

Cross-validation is a standard statistical method to estimate the generalization error of a predictive model. In k-fold cross-validation, a training set is divided into k equal-sized subsets. Then the following procedure is repeated for each subset: a model is built using the other k-1 subsets as the training set, and its performance is evaluated on the current subset. This means that each subset is used for testing exactly once. The result of the cross-validation is the average of the performances obtained from the k rounds.
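The procedure described above can be sketched in plain Python. This is a minimal illustration, not how RapidMiner implements it: the function names, the contiguous split without shuffling, and the toy majority-class model are all assumptions made for the example.

```python
from collections import Counter


def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds whose sizes differ by at most one."""
    base, extra = divmod(n, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_validate(X, y, k, fit, score):
    """Train on k-1 folds, test on the remaining one, and average the k scores."""
    scores = []
    for test_idx in k_fold_indices(len(X), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(score(model, [X[i] for i in test_idx], [y[i] for i in test_idx]))
    return sum(scores) / k


# Toy model for illustration: always predict the most common training label.
def fit_majority(X_train, y_train):
    return Counter(y_train).most_common(1)[0][0]


def accuracy(model, X_test, y_test):
    return sum(1 for label in y_test if label == model) / len(y_test)


X = list(range(10))
y = [1, 1, 1, -1, 1, 1, -1, 1, 1, 1]
cv_result = cross_validate(X, y, 5, fit_majority, accuracy)
print(cv_result)
```

Each index appears in exactly one test fold, so every example is used for testing exactly once. Real tools typically shuffle or stratify the data before splitting, which this sketch leaves out.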

This post explains how to interpret cross-validation results in RapidMiner.